Recent Advances in the Application of Artificial Intelligence in Otorhinolaryngology-Head and Neck Surgery

Abstract

This study presents an up-to-date survey of the use of artificial intelligence (AI) in the field of otorhinolaryngology, considering opportunities, research challenges, and research directions. We searched PubMed, the Cochrane Central Register of Controlled Trials, Embase, and the Web of Science. We initially retrieved 458 articles. The exclusion of non-English publications and duplicates yielded a total of 90 remaining studies. These 90 studies were divided into those analyzing medical images, voice, medical devices, and clinical diagnoses and treatments. Most studies (42.2%, 38/90) used AI for image-based analysis, followed by clinical diagnoses and treatments (24 studies). Each of the remaining two subcategories included 14 studies. Machine learning and deep learning have been extensively applied in the field of otorhinolaryngology. However, the performance of AI models varies and research challenges remain.

INTRODUCTION

Artificial intelligence (AI) refers to the ability of machines to mimic human intelligence without explicit programming; AI can solve tasks that require complex decision-making [1,2]. Recent advances in computing power and big data handling have encouraged the use of AI to aid or substitute for conventional approaches. The results of AI applications are promising, and have attracted the attention of researchers and practitioners. In 2015, some AI applications began to outperform human intelligence: ResNet performed better than humans in the ImageNet Large Scale Visual Recognition Competition 2015 [3], and AlphaGo became the first computer Go program to beat a professional Go player in October 2015 [4]. Such technical advances have promising implications for medical applications, particularly because the amount of medical data was projected to double every 73 days by 2020 [5]. As such, it is expected that AI will revolutionize healthcare because of its ability to handle data at a massive scale. Currently, AI-based medical platforms support diagnosis, treatment, and prognostic assessments at many healthcare facilities worldwide. The applications of AI include drug development, patient monitoring, and personalized treatment. For example, IBM Watson is a pioneering AI-based medical technology platform used by over 230 organizations worldwide. IBM Watson has consistently outperformed humans in several case studies. In 2016, IBM Watson diagnosed a rare form of leukemia by referring to a dataset of 20 million oncology records [6]. It is clear that the use of AI will fundamentally revolutionize medicine. Frost and Sullivan (a research company) forecast that AI will boost medical outcomes by 30%–40% and reduce treatment costs by up to 50%. The AI healthcare market is expected to attain a value of USD 31.3 billion by 2025 [7].

Otorhinolaryngologists use many instruments to examine patients. Since the early 1990s, AI has been increasingly used to analyze radiological and pathological images, audiometric data, and cochlear implant (CI) performance [8-10]. As various methods of AI analysis have been developed and refined, the practical scope of AI in the otorhinolaryngological field has been broadened (e.g., virtual reality technology [11-13]). Therefore, it is essential for otorhinolaryngologists to understand the capabilities and limitations of AI. In addition, a data-driven approach to healthcare requires clinicians to ask the right questions and to fit well into interdisciplinary teams [8].

Herein, we review the basics of AI, its current status, and future opportunities for AI in the field of otorhinolaryngology. We seek to answer two questions: “Which areas of otorhinolaryngology have benefited most from AI?” and “What does the future hold?”

MACHINE LEARNING AND DEEP LEARNING

AI has fascinated medical researchers and practitioners since the advent of machine learning (ML) and deep learning (DL) (two forms of AI) in 1990 and 2010, respectively. A flowchart of the literature search and study selection is presented in Fig. 1. Importantly, AI, ML, and DL overlap (Fig. 2). There is no single definition of AI; its purpose is to automate tasks that generally require the application of human intelligence [14]. Such tasks include object detection and recognition, visual understanding, and decision-making. Generally, AI incorporates both ML and DL, as well as many other techniques that are difficult to map onto recognized learning paradigms. ML is a data-driven technique that blends computer science with statistics, optimization, and probability [15]. An ML algorithm requires (1) input data, (2) examples of correct predictions, and (3) a means of validating algorithm performance. ML uses input data to build a model (i.e., a pattern) that allows humans to draw inferences [16,17]. DL is a subfield of ML, in which tens or hundreds of representative layers are learned with the aid of neural networks. A neural network is a learning structure that features several neurons; when combined with an activation function, a neural network delivers non-linear predictions. Unlike traditional ML algorithms, which typically only extract features, DL processes raw data to define the representations required for classification [18]. DL has been incorporated in many AI applications, including those for medical purposes [19]. The applications of DL thus far include image classification, speech recognition, autonomous driving, and text-to-speech conversion; in these domains, the performance of DL is at least as good as that of humans. Given the significant roles played by ML and DL in the medical field, clinicians must understand both the advantages and limitations of data-driven analytical tools.
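The role of the activation function described above can be illustrated with a minimal sketch. The two-neuron network below uses hand-chosen, hypothetical weights (they are not taken from any reviewed study) and is evaluated on the exclusive-or (XOR) pattern, a standard example that no purely linear model can reproduce. With a rectified linear unit (ReLU) activation, the network outputs the XOR pattern; with the activation removed, the same weights collapse to a linear function of the inputs.

```python
def relu(z):
    """Rectified linear unit: passes positive values, clips negatives to 0."""
    return max(0.0, z)


def identity(z):
    """No activation: the neuron stays purely linear."""
    return z


def tiny_network(x1, x2, activation):
    """Two hidden neurons and one output neuron.

    The weights are hand-chosen for illustration (an assumption, not a
    trained model).
    """
    h1 = activation(x1 + x2)        # hidden neuron 1
    h2 = activation(x1 + x2 - 1.0)  # hidden neuron 2
    return h1 - 2.0 * h2            # linear output neuron


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]

# With ReLU, the outputs follow the XOR pattern 0, 1, 1, 0.
with_relu = [tiny_network(a, b, relu) for a, b in inputs]

# Without an activation, the output equals 2 - (x1 + x2), a linear function.
without_activation = [tiny_network(a, b, identity) for a, b in inputs]

print(with_relu)
print(without_activation)
```

In practice, the weights are learned from data rather than set by hand, and DL models stack many such layers.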

AI IN THE FIELD OF OTORHINOLARYNGOLOGY

AI aids medical image-based analysis

Medical imaging yields a visual representation of an internal bodily region to facilitate analysis and treatment. Ear, nose, and throat-related diseases are imaged in various manners. Table 1 summarizes the 38 studies that used AI to assist medical image-based analysis in clinical otorhinolaryngology. Nine studies (23.7%) addressed hyperspectral imaging, nine studies (23.7%) analyzed computed tomography, six studies (15.8%) applied AI to magnetic resonance imaging, and one study (2.63%) analyzed panoramic radiography. Laryngoscopic and otoscopic imaging were addressed in three studies each (7.89% each). The remaining seven studies (18.42%) used AI to aid in the analysis of neuroimaging biomarker levels, biopsy specimens, stimulated Raman scattering data, ultrasonography and mass spectrometry data, and digitized images. Nearly all AI algorithms comprised convolutional neural networks; the remaining algorithms consisted of support vector machines and random forests. Fig. 3 presents a schematic diagram of the application of convolutional neural networks in medical image-based analysis.
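As a rough illustration of the core operation in a convolutional neural network, the following sketch slides a small filter over a toy image, applies a ReLU activation, and pools the result. The 4×4 "image", the edge-detecting kernel, and all function names are hypothetical and chosen for readability; real networks learn their kernels from data and operate on far larger inputs.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image without padding.

    This is cross-correlation (no kernel flip), which is what deep learning
    libraries conventionally call "convolution".
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output


def relu_map(feature_map):
    """Apply ReLU element-wise to a feature map."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def global_max_pool(feature_map):
    """Reduce a feature map to its single strongest response."""
    return max(max(row) for row in feature_map)


# Toy image: dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-chosen kernel that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = relu_map(conv2d_valid(image, kernel))
print(feature_map)                   # strongest response in the middle column
print(global_max_pool(feature_map))
```

The feature map responds only where the dark-to-bright boundary lies, which is the sense in which a convolutional layer extracts local image features before later layers perform classification.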

AI aids voice-based analysis

The subfield of voice-based analysis within otorhinolaryngology seeks to improve speech, to detect voice disorders, and to reduce the noise experienced by patients with CIs. Table 2 lists the 14 studies that used AI for speech-based analyses. Nine (64.29%) sought to improve speech intelligibility or reduce noise for patients with CIs. Two (14.29%) used acoustic signals to detect voice disorders [67] and “hot potato voice” [70]. In other studies, AI was applied to symptoms, voice pathologies, or electromyographic signals to detect voice disorders [68,69], or to restore the voice of a patient who had undergone total laryngectomy [71]. Neural networks were favored, followed by k-nearest neighbor methods, support vector machines, and other widely known classifiers (e.g., decision trees and XGBoost). Fig. 4 presents a schematic diagram of the application of convolutional neural networks in medical voice-based analysis.

AI analysis of biosignals detected from medical devices

Medical device-based analyses seek to predict the responses to clinical treatments in order to guide physicians who may wish to choose alternative or more aggressive therapies. AI has been used to assist polysomnography, to explore gene expression profiles, to interpret cellular cartographs, and to evaluate the outputs of non-contact devices. These studies are summarized in Table 3. Of these 14 studies, half (50%, seven studies) focused on analyses of gene expression data. Three studies (21.43%) used AI to examine polysomnography data in an effort to score sleep stages [72,73] or to identify long-term cardiovascular disease [74]. Most algorithms employed ensemble learning (random forests, Gentle Boost, XGBoost, and a general linear model+support vector machine ensemble); this approach was followed by neural network-based algorithms (convolutional neural networks, autoencoders, and shallow artificial neural networks). Fig. 5 presents a schematic diagram of the application of the autoencoder and the support vector machine in the analysis of gene expression data.

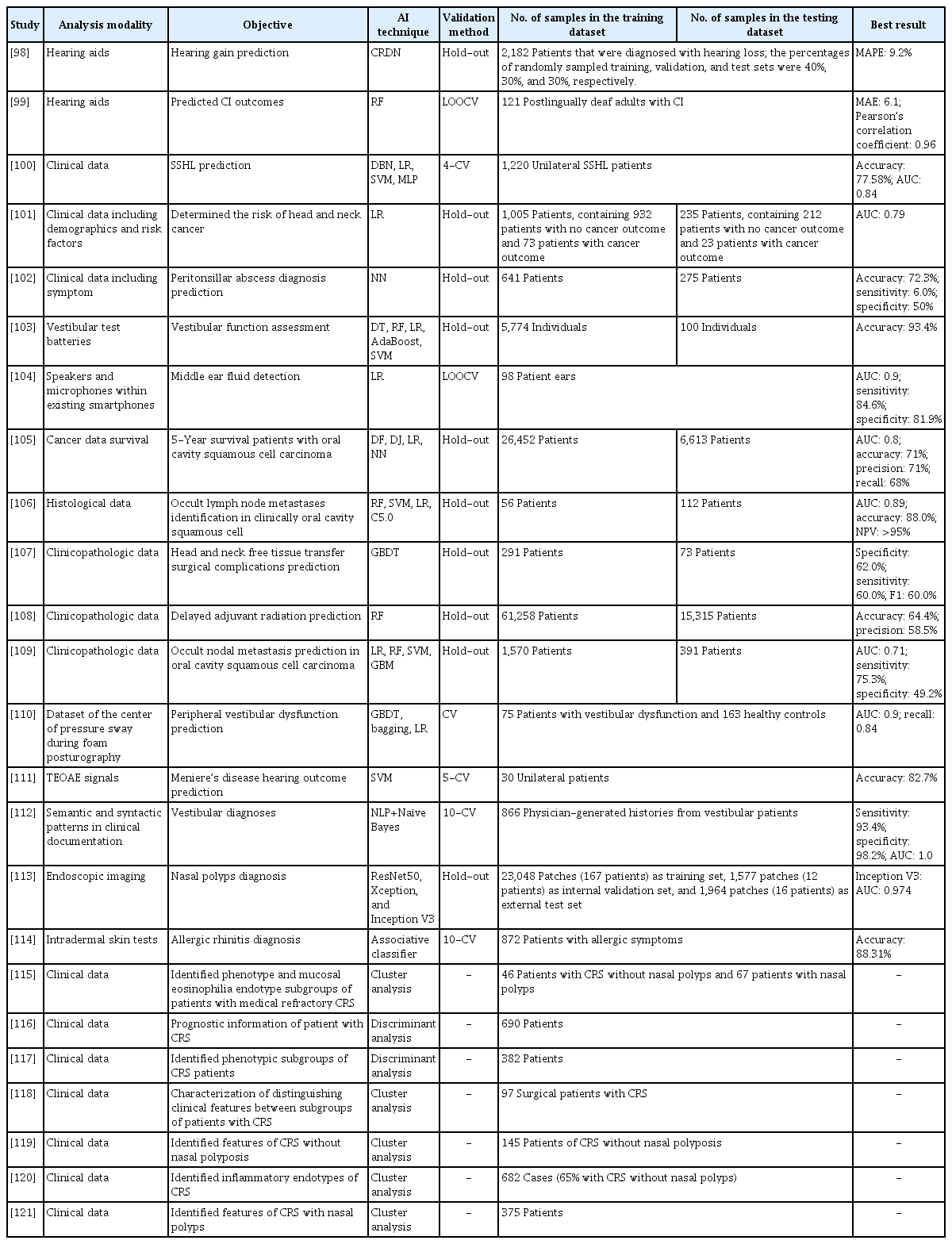

AI for clinical diagnoses and treatments

Clinical diagnoses and treatments consider only symptoms, medical records, and other clinical documentation. We retrieved 24 relevant studies (Table 4). Of the ML algorithms, most used logistic regression for classification, followed by random forests and support vector machines. Notably, many studies used hold-outs to validate new methods. Fig. 6 presents a schematic diagram of the process cycle of utilizing AI for clinical diagnoses and treatments.
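Hold-out validation, mentioned above, can be sketched as follows. The example sets aside a fraction of the records as a test set that the model never sees during training, and contrasts this with k-fold cross-validation, in which every record serves as test data exactly once. The function names, the 20% test fraction, and the 50 placeholder records are assumptions made for illustration; they do not reflect the settings of any reviewed study.

```python
import random


def holdout_split(records, test_fraction=0.2, seed=0):
    """Shuffle the records once and hold out a fraction for testing."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    shuffled = list(records)
    rng.shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)


def k_fold_indices(n_records, k):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    folds = [list(range(i, n_records, k)) for i in range(k)]
    for i in range(k):
        test_idx = folds[i]
        train_idx = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train_idx, test_idx


# Placeholder patient records, represented here only by their IDs.
records = list(range(50))

train, test = holdout_split(records)
print(len(train), len(test))

for train_idx, test_idx in k_fold_indices(10, k=5):
    print(test_idx)
```

A single hold-out split is simple and inexpensive, but with small datasets the estimated performance can depend heavily on which records happen to fall in the test set; cross-validation averages over several splits at a higher computational cost.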

DISCUSSION

We systematically analyzed reports describing the integration of AI in the field of otorhinolaryngology, with an emphasis on how AI may best be implemented in various subfields. Various AI techniques and validation methods have found favor. As described above, advances in 2015 underscored that AI would play a major role in future medicine. Here, we reviewed post-2015 AI applications in the field of otorhinolaryngology. Before 2015, most AI-based technologies focused on CIs [10,75-86]. However, AI applications have expanded greatly in recent years. In terms of image-based analysis, images yielded by rigid endoscopes, laryngoscopes, stroboscopes, computed tomography, magnetic resonance imaging, and multispectral narrow-band imaging [38], as well as hyperspectral imaging [45-52,54], are now interpreted by AI. In voice-based analysis, AI is used to evaluate pathological voice conditions associated with vocal fold disorders, to analyze and decode phonation itself [67], to improve speech perception in noisy conditions, and to improve the hearing of patients with CIs. In medical device-based analyses, AI is used to evaluate tissue and blood test results, as well as the outcomes of otorhinolaryngology-specific tests (e.g., polysomnography) [72,73,122] and audiometry [123,124]. AI has also been used to support clinical diagnoses and treatments, decision-making, the prediction of prognoses [98-100,125,126], disease profiling, the construction of mass spectral databases [43,127-129], the identification or prediction of disease progress [101,105,107-110,130], and the confirmation of diagnoses and the utility of treatments [102-104,112,131].

Although many algorithms have been applied, some are not consistently reliable, and certain challenges remain. AI will presumably become embedded in all tools used for diagnosis, treatment selection, and outcome predictions; thus, AI will be used to analyze images, voices, and clinical records. These are the goals of most studies, but again, the results have been variable and are thus difficult to compare. The limitations include: (1) small training datasets and differences in the sizes of the training and test datasets; (2) differences in validation techniques (notably, some studies have not included data validation); and (3) the use of different performance measures during either classification (e.g., accuracy, sensitivity, specificity, F1, or area under the receiver operating characteristic curve) or regression (e.g., root mean square error, least mean absolute error, R-squared, or log-likelihood ratio).
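The difficulty of comparing studies that report different performance measures can be made concrete with a short sketch. The classification measures below are computed from a single hypothetical confusion matrix (the counts are invented for illustration), and the regression measures from a toy set of predictions. One and the same classifier yields noticeably different numbers depending on the measure reported.

```python
import math


def classification_metrics(tp, fp, tn, fn):
    """Common measures derived from confusion-matrix counts."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),       # true positive rate (recall)
        "specificity": tn / (tn + fp),       # true negative rate
        "f1": 2 * tp / (2 * tp + fp + fn),   # harmonic mean of precision and recall
    }


def regression_metrics(y_true, y_pred):
    """Root mean square error and mean absolute error."""
    errors = [p - t for t, p in zip(y_true, y_pred)]
    n = len(errors)
    return {
        "rmse": math.sqrt(sum(e * e for e in errors) / n),
        "mae": sum(abs(e) for e in errors) / n,
    }


# Hypothetical test set: 50 diseased and 50 healthy patients.
cls = classification_metrics(tp=40, fp=5, tn=45, fn=10)
for name, value in cls.items():
    print(f"{name}: {value:.3f}")

# Toy regression example with one large error.
reg = regression_metrics(y_true=[1.0, 2.0, 3.0], y_pred=[1.0, 2.0, 5.0])
for name, value in reg.items():
    print(f"{name}: {value:.3f}")
```

Here the same classifier has a sensitivity of 0.80 and a specificity of 0.90, so a study reporting only one of the two would convey a different impression of its performance. In the regression example, the single large error inflates the root mean square error more than the mean absolute error.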

Supervised ML algorithms generally require large, labeled training datasets. The lack of such data was often a major limitation of the studies that we reviewed. AI-based predictions in the field of otorhinolaryngology must be rigorously validated. Often, as in the broader medical field, an element of uncertainty compromises an otherwise ideal predictive method, and other research disparities were also apparent in the studies that we reviewed. Recent promising advances in AI include the ensemble learning model, which is more intuitive and interpretable than other models; this model facilitates bias-free AI-based decision-making. The algorithm incorporates a concept of “fairness,” considers ethical and legal issues, and respects privacy during data mining tasks. In summary, although otorhinolaryngology-related AI applications were divided into four categories in the present study, the practical use of a particular AI method depends on the circumstances. AI will be helpful for use in real-world clinical treatment involving complex datasets with heterogeneous variables.

CONCLUSION

We have described several techniques and applications for AI; notably, AI can overcome existing technical limitations in otorhinolaryngology and aid in clinical decision-making. Otorhinolaryngologists have interpreted instrument-derived data for decades, and many algorithms have been developed and applied. However, the use of AI will refine these algorithms, and big health data and information from complex heterogeneous datasets will become available to clinicians, thereby opening new diagnostic, treatment, and research frontiers.

HIGHLIGHTS

▪ Ninety studies that implemented artificial intelligence (AI) in otorhinolaryngology were reviewed and classified.

▪ The studies were divided into four subcategories.

▪ Research challenges regarding future applications of AI in otorhinolaryngology are discussed.

Notes

No potential conflict of interest relevant to this article was reported.

AUTHOR CONTRIBUTIONS

Conceptualization: DHK, SWK, SL. Data curation: BAT, DHK, GK. Formal analysis: BAT, DHK, GK. Funding acquisition: DHK, SL. Methodology: BAT, DHK, GK. Project administration: DHK, SWK, SL. Visualization: BAT, DHK, GK. Writing–original draft: BAT, DHK. Writing–review & editing: all authors.

Acknowledgements

This research was supported by the Basic Science Research Program through an NRF grant funded by the Korean government (MSIT) (No. 2020R1A2C1009744), the Bio Medical Technology Development Program of the NRF funded by the Ministry of Science and ICT (No. 2018M3A9E8020856), and the Po-Ca Networking Group funded by the Postech-Catholic Biomedical Engineering Institute (PCBMI) (No. 5-2020-B0001-00046).